Writing higher order multiple choice questions

by Cynthia J. Brame

Cite this guide: Brame, C. (2022). Writing higher order multiple choice questions. Vanderbilt University Center for Teaching. Retrieved [today's date] from https://cft.vanderbilt.edu/writing-higher-order-multiple-choice-questions/.

When we write tests, we typically want to assess a range of things students should be able to do as a result of instruction. We often want to determine if they remember important elements of the content, but we also want to know if they can use these elements to understand and solve problems (whatever a “problem” may look like in our own discipline). It’s relatively straightforward to write multiple choice questions to determine whether students remember what we want them to remember (and these guidelines can help us do it in a valid and reliable way), but it can be more challenging to write questions that test those higher order skills of being able to use the information. This guide offers six suggestions for writing higher order multiple choice questions.

- Use higher order Bloom’s categories and associated verbs.

- Use specific examples that represent how your students will use the information.

  - Option 1: Ask students to make choices based on scenarios or cases.

  - Option 2: Ask students to identify the rule or concept in an example.

  - Option 3: Ask students to interpret and use visuals to make predictions or draw conclusions.

- Have students choose answers that represent their reasoning as well as the correct answer.

- Require multi-logical thinking.

- Use multiple T/F.

- Use question clusters.

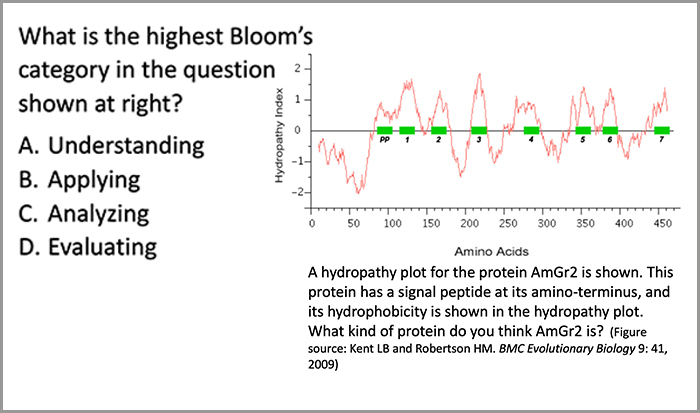

Strategy 1: Use higher order Bloom’s categories and associated verbs

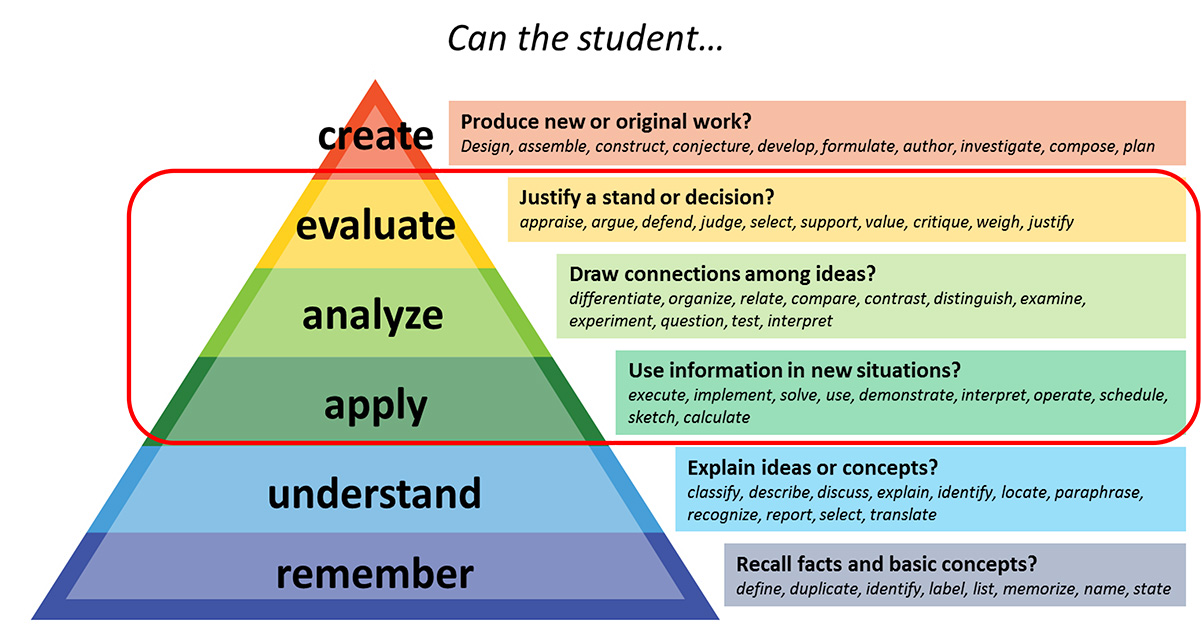

Bloom’s taxonomy is a framework for categorizing educational goals. It describes six levels of cognitive outcomes, often presented in a pyramid.

Remembering is considered the most basic of the things we might ask our students to do; at this level, we might ask whether a student can recall facts and basic concepts. Above remembering is understanding, where we might ask whether a student can explain ideas or concepts. The next level is applying, where we ask whether the student can use information in new situations. In Bloom’s taxonomy, the category above applying is analyzing, where we ask whether the student can draw connections among ideas. The next level, and the last one that we can really measure with multiple choice questions, is evaluating, where we ask whether students can justify a stand or decision. Finally, creating is considered the most advanced type of cognitive outcome in Bloom’s taxonomy; here, we would ask whether students can produce new or original work.

Generally, the categories above understanding are considered higher order cognitive levels, and the “apply,” “analyze,” and “evaluate” categories can reasonably be assessed with multiple choice questions.

The descriptions of the categories, and of which ones are considered higher order, are useful, but they may only help us name what we already know we want our students to do. The magic comes with the associated verbs. The figure shows about ten verbs per category, but if you search online for Bloom’s verbs, you’ll find charts with many more. These verbs can be inspirational as you think about how to ask questions that determine whether your students can do the sort of application, analysis, or evaluation that is appropriate for your teaching.

For example, the two questions below require students to apply their understanding to a specific example and thus ask students to do some higher order thinking.

Strategy 2: Use specific examples that represent how your students will use the information.

Asking your students to illustrate their ability to use your content in specific examples or scenarios also allows you to test students’ higher order thinking. There are several distinct ways you can do this.

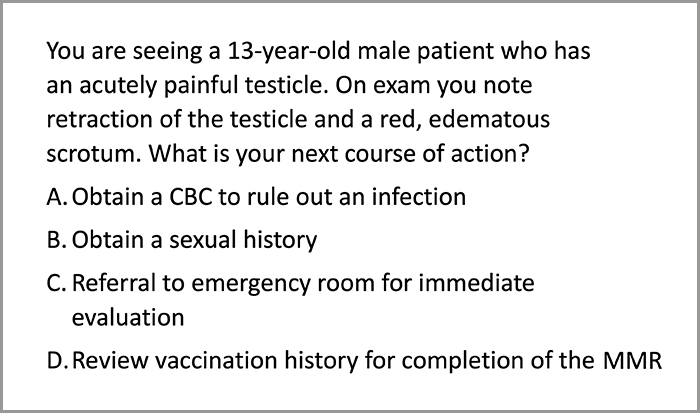

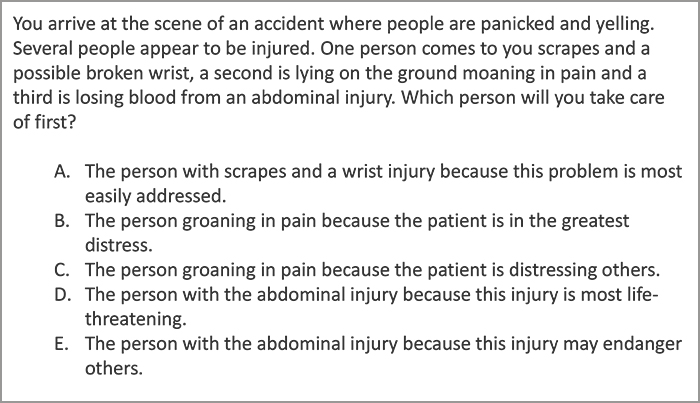

Option 1: Ask students to make choices based on scenarios or cases. Clinical vignettes provide a good example of how to do this; Figure 4 provides a very brief example.

In writing these types of scenarios, we should ask students to make choices that mimic how they would use their knowledge in a real-world setting. The scenarios should be of an appropriate level of complexity, testing how students should be able to use the information at this point in their educational progression. We should also avoid including “red herrings,” or purposely misleading information. Finally, we should focus on problems students are likely to see rather than rare exceptions, a guideline often described with the analogy, “When you hear galloping, think of horses, not zebras.”

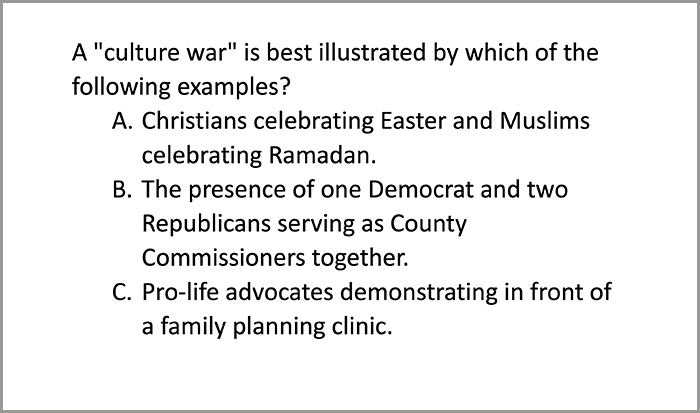

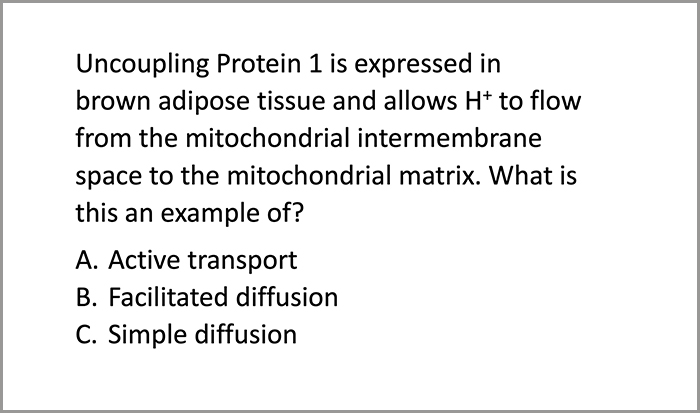

Option 2: Ask students to identify the rule or concept in an example. Examples are shown in Figures 5 and 6.

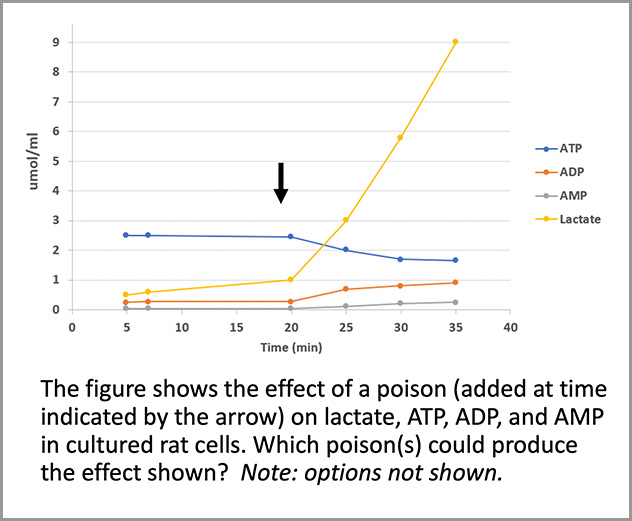

Option 3: Ask students to interpret and use visuals to make predictions or draw conclusions. Figure 7 provides one example. Of course, the visuals you use will be discipline-specific; while this example is appropriate for a biochemistry course, other types of visuals would be much more appropriate stimuli in other disciplines.

Strategy 3: Have students choose answers that represent their reasoning as well as the correct answer.

Figure 8 presents an example, where the alternatives represent three possible choices, with competing reasons for the different choices. The test-taker therefore has to evaluate whether each reason is accurate and whether it justifies the choice. If the options are plausible, this can be quite challenging and can require higher order thinking.

Strategy 4: Require multi-logical thinking.

Multi-logical thinking is “thinking that requires knowledge of more than one fact to logically and systematically apply concepts to a …problem” (Morrison and Free, 2001). Figure 7 illustrates this idea well. For students to choose a poison that would have these effects, they would have to 1) identify the effect on ATP, 2) identify the effect on lactate, 3) consider whether each poison option would have this effect on ATP, and 4) determine whether each poison option would have this effect on lactate.

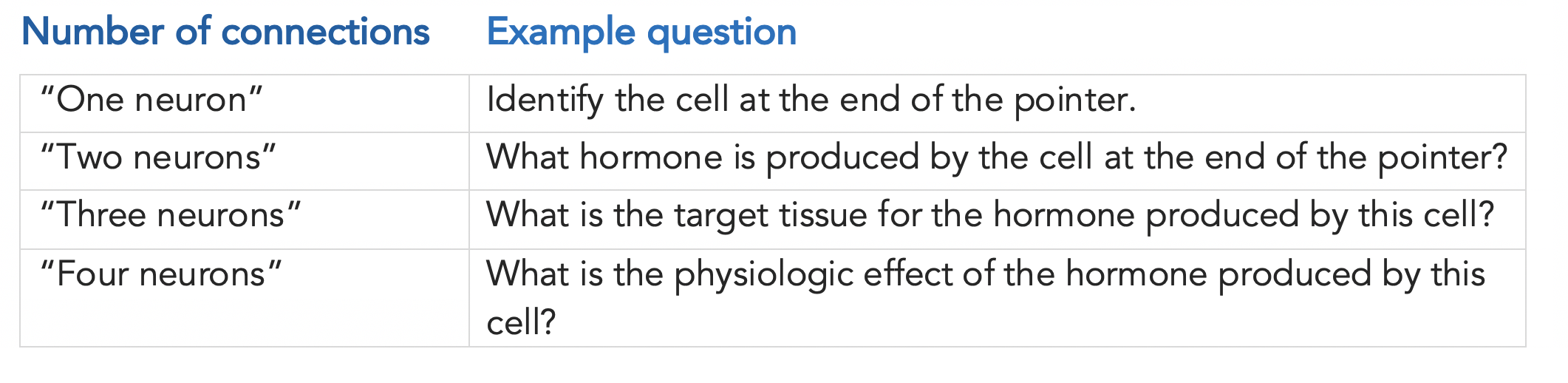

Robert Burns refers to this as “multiple neuron” thinking and provides a useful illustration (Burns, 2010):

Strategy 5: Use multiple T/F.

Multiple true/false items can help prevent students from using partial knowledge to identify the correct response. They can therefore reveal students’ partial knowledge and help us understand the nuance of what our students have learned and what they’re still working on.

Strategy 6: Use question clusters.

One downside of higher order test questions is that they are harder to write and often take longer for students to answer. Question clusters, or sets of questions that draw on the same dataset or scenario, can help mitigate these effects. By having several questions draw from a single dataset or scenario, we can reduce cognitive load for the test-taker, and we can also reduce the challenge of question writing for ourselves.

While this guide presents these ideas as six distinct strategies for writing higher order multiple choice questions, there is overlap among some of the strategies (e.g., using higher order Bloom’s categories has a great deal of overlap with using multi-logical thinking). Further, it can be advantageous to use some of these ideas in combination, such as using a question cluster that presents a single dataset and then asks one multiple T/F question and another question where students must select their reasoning. The goal, of course, is to develop assessments that address your learning objectives, providing you and your students with a tool to determine how they’re progressing.

A final note: while the individual questions matter a lot, the cornerstone of a good exam is a good plan for how the points are distributed across content areas and types of thinking. Be sure to start your exam writing with a test blueprint that will help ensure you stay on track with the questions you ask.

References and other resources

Burns, E. R. (2010). “Anatomizing” reversed: Use of examination questions that foster use of higher order learning skills by students. Anatomical Sciences Education, 3(6), 330-334.

Case, S., & Swanson, D. (2002). Constructing written test questions for the basic and clinical sciences. National Board of Medical Examiners.

Morrison, S., & Free, K. (2001). Writing multiple-choice test items that promote and measure critical thinking. Journal of Nursing Education, 40, 17-24.

This teaching guide is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.